October 26, 2023

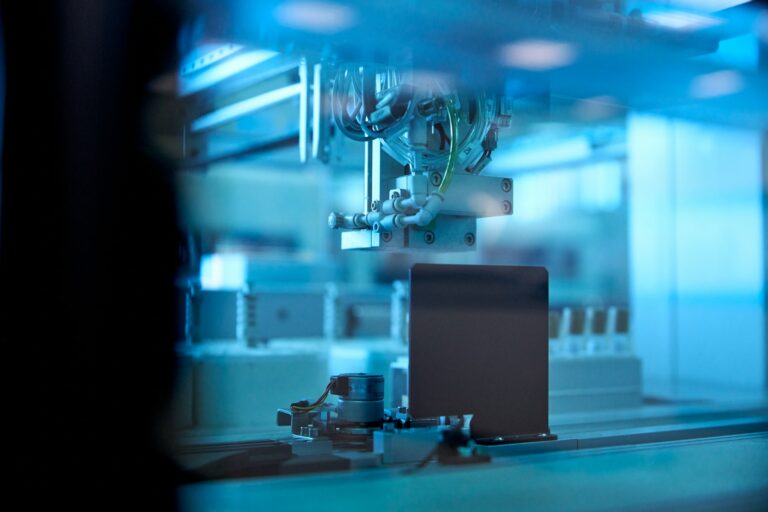

12 Significant Machine Learning Algorithms in Manufacturing You Need to Know in 2023

For decades, great minds in science and technology have labored to advance their research in AI, specifically in the domain of machine learning (ML). Recent breakthroughs are assisting companies in reaching new levels of efficiency and growth by implementing ML across various operations.

From healthcare to finance, education to agriculture: ML is helping pioneers of industry make revolutionary leaps forward in their respective fields.

We’ll take a look at 12 specific machine learning algorithms in manufacturing and the substantial ways they are changing operations for the better.

How Is Machine Learning Used in Manufacturing?

Machine learning is a key tool for increasing efficiency in manufacturing plants. It takes over repetitive, physically demanding tasks that lead to worker fatigue, and it helps plants scale output while keeping results consistent.

Machine learning algorithms are widely adopted in manufacturing for:

- Quality control
- Automation
- Assembly
- Defect detection
- Predictive maintenance

Machine Learning in Smart Manufacturing Facilities

ML is instrumental in modernizing facilities so that every stage of manufacturing can be optimized for refined performance. The following are specialized ways ML is transforming manufacturing for the better.

Supply Chain Optimization

ML optimizes supply chain processes to and from the factory by forecasting stock and inventory management requirements, as well as facilitating transportation logistics by fine-tuning shipping routes.

Predictive Maintenance

Where traditional machine maintenance is performed according to a predefined schedule, ML predictive maintenance uses numerous data points and feedback analysis to identify potential component failures before they disrupt operations.

Inspection and Monitoring

ML-based computer vision and pre-trained pattern-recognition tools are used to inspect materials and products throughout each phase of production, identifying potential anomalies or damaged items with precision and consistency.

Quality Control

ML-powered root cause analysis (RCA) is deployed to determine the source of inaccuracies in order to improve the process, helping factories reduce process-driven production loss and unnecessary downtime.

Generative Design

With generative design, paired generator and discriminator models propose and evaluate engineered designs, working alongside tools like 3D CAD, simulation, and optimization software. The generator produces candidate designs and the discriminator scores them, so design quality and iteration speed improve over successive rounds.

What Are the 4 Primary Machine Learning Algorithm Categories?

There are numerous applicable ML algorithms that serve different purposes, all falling under one of four main categories.

1. Supervised

Supervised ML uses human-labeled input and output data to train the algorithm to recognize patterns and develop the ability to make accurate predictions for new data.

2. Unsupervised

Unsupervised ML involves algorithms that analyze unlabeled data to uncover patterns, structures, or relationships without being trained on any predefined outcome targets.

3. Semi-Supervised

Semi-supervised ML combines labeled and unlabeled data to improve model performance, using labeled data to help guide the algorithm’s understanding of unlabeled inputs.

4. Reinforcement Learning

In reinforcement learning, ML algorithms learn to make sequential decisions by interacting with an environment and observing the results. OpenAI’s widely recognized ChatGPT is probably the best-known project that makes use of reinforcement learning. In this case, OpenAI uses reinforcement learning from human feedback (RLHF), which combines human ratings of model outputs with reward-based optimization to steer the model toward preferred responses.

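This field may seem open-ended, but in all cases, the algorithm is directed by rewards or penalties to develop an understanding of rules, intent, and even complex logic. Unlike classification, regression, or clustering, which are handled by the supervised and unsupervised methods above, reinforcement learning is suited to control and decision-making tasks such as robot motion, process tuning, and production scheduling.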

12 Important Machine Learning Algorithms Currently Deployed in Manufacturing

The twelve machine learning algorithms in manufacturing below illuminate how different ML methods are used for unique, highly specialized purposes. Each heading notes which of the categories detailed above the algorithm belongs to.

1. Linear Regression (Supervised)

Linear regression is used to model the relationship between a dependent variable and one or more independent variables. It predicts a continuous numeric value, rather than the likelihood of an event, by fitting a straight line (or plane) to past data.

Examples of linear regression applications include estimating a health measure such as blood pressure from factors like age, weight, and medical conditions, or forecasting sales based on budget, competitor activities, and other previously collected data.
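To make the idea concrete, here is a minimal sketch of a one-variable linear regression fitted with ordinary least squares. The spindle-speed and tool-wear numbers are hypothetical values invented for illustration, and `fit_line` is an illustrative helper name, not part of any library.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: spindle speed (thousand rpm) vs. measured tool wear (microns)
speed = [1.0, 2.0, 3.0, 4.0, 5.0]
wear = [12.0, 14.0, 16.0, 18.0, 20.0]

slope, intercept = fit_line(speed, wear)
predicted_wear = slope * 6.0 + intercept  # continuous prediction for an unseen speed
print(f"wear = {slope:.2f} * speed + {intercept:.2f}; predicted at 6.0: {predicted_wear:.2f}")
```

The output is a number on a continuous scale, which is what separates linear regression from the classification methods that follow.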

2. Logistic Regression (Supervised)

Logistic regression estimates the probability that a specific outcome will occur, which makes it a standard tool for binary classification. The model outputs a value between 0 and 1, and a threshold (commonly 0.5) turns that probability into a yes-or-no prediction. It can be used to anticipate whether a transaction is fraudulent, or in marketing, to predict whether a customer will respond positively to an ad. It fits any scenario where predicting binary outcomes is beneficial.
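As a rough sketch of how this works, the snippet below fits a one-feature logistic regression with plain gradient descent. The vibration readings, the pass/fail labels, and the learning-rate and epoch settings are all assumptions chosen for illustration; a production system would normally rely on an established library instead.

```python
import math


def sigmoid(z):
    """Squash any real number into the (0, 1) range."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, labels, lr=0.1, epochs=5000):
    """Gradient descent on the average log-loss for one feature."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, labels):
            err = sigmoid(w * x + b) - y  # predicted probability minus true label
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical data: vibration amplitude (mm/s) and whether the part failed inspection
vibration = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
failed = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic(vibration, failed)
p_low = sigmoid(w * 1.0 + b)   # estimated failure probability at low vibration
p_high = sigmoid(w * 4.0 + b)  # estimated failure probability at high vibration
print(f"P(fail | 1.0 mm/s) = {p_low:.3f}, P(fail | 4.0 mm/s) = {p_high:.3f}")
```

Comparing each probability against 0.5 gives the binary prediction.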

3. Artificial Neural Networks (ANN) (Supervised, Unsupervised, or Reinforcement Learning)

Neural networks are designed to loosely mimic the functioning of the human brain. Simple processing units, called nodes or neurons, are arranged in interconnected layers, and the connections between them play a role similar to the brain’s synapses.

Each connection carries a weight, and training adjusts these weights so the network learns from input data. Neural networks can learn object recognition in images, speech and text understanding, translation, and a wide range of other tasks.

4. Decision Trees (Supervised)

Decision tree ML algorithms are used for classification (and also regression) problems, particularly when the data contains several classes, each distinguished by different features. Decision trees are predictive models in which each internal node tests one feature, each branch represents a result of that test, and each leaf gives a predicted outcome.
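The core step in building a tree is choosing where to split. The sketch below finds the best single split on one feature by minimizing Gini impurity, a common splitting criterion. It is only a one-level "stump" under simplified assumptions (binary 0/1 labels, one feature), and the oven temperatures and defect labels are hypothetical.

```python
def gini(labels):
    """Gini impurity for binary 0/1 labels: 0.0 means the group is pure."""
    n = len(labels)
    if n == 0:
        return 0.0
    p = sum(labels) / n  # fraction of class 1
    return 2.0 * p * (1.0 - p)


def best_split(values, labels):
    """Try midpoints between sorted unique values; keep the lowest weighted impurity."""
    best_threshold, best_score = None, float("inf")
    candidates = sorted(set(values))
    for lo, hi in zip(candidates, candidates[1:]):
        threshold = (lo + hi) / 2.0
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best_score:
            best_threshold, best_score = threshold, score
    return best_threshold, best_score


# Hypothetical data: oven temperature (°C) and whether the part was defective
temps = [180, 185, 190, 195, 210, 215, 220, 225]
defect = [0, 0, 0, 0, 1, 1, 1, 1]

threshold, impurity = best_split(temps, defect)
print(f"split at {threshold} °C, weighted impurity {impurity}")
```

A full tree repeats this search inside each resulting group until the groups are pure or a depth limit is reached.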

5. Random Forests (Supervised)

A single decision tree can keep branching until it fits its training data very closely, but a tree with too many branches becomes overly complex, difficult to interpret, and prone to overfitting.

Random forests address this by training many smaller decision trees, often hundreds or thousands, each on a random sample of the data and a random subset of the features.

The algorithm takes a poll of this metaphorical forest of trees, using a majority vote for classification or an average for regression, to produce output that is typically more accurate and robust than any single tree.

6. Isolation Forest (Unsupervised)

An isolation forest functions as an anomaly detection algorithm. It builds many random trees, each of which repeatedly picks a random feature and a random split value to partition the data. Anomalies tend to be isolated in fewer splits than normal points, so a short average path length flags a data point as an outlier. Because no labeled examples are needed, an isolation forest can uncover previously unknown anomalies, which is beneficial in cases such as complex fraud detection and network security.
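The intuition that outliers are easier to isolate can be shown with a heavily simplified, one-feature sketch. A real isolation forest also subsamples the data, picks among several features, and normalizes path lengths into a score; none of that is done here. The motor-current readings and the helper names are hypothetical.

```python
import random


def isolation_depth(point, data, rng, max_depth=20):
    """Count the random splits needed to separate `point` from the rest of `data`."""
    depth = 0
    current = list(data)
    while len(current) > 1 and depth < max_depth:
        lo, hi = min(current), max(current)
        if lo == hi:  # only duplicates of the point remain
            break
        split = rng.uniform(lo, hi)
        # Keep only the side of the split that still contains the point
        if point < split:
            current = [x for x in current if x < split]
        else:
            current = [x for x in current if x >= split]
        depth += 1
    return depth


def mean_depth(point, data, n_trees=200, seed=0):
    """Average isolation depth over many random trees (seeded for repeatability)."""
    rng = random.Random(seed)
    return sum(isolation_depth(point, data, rng) for _ in range(n_trees)) / n_trees


# Hypothetical motor current readings (amps); 9.7 is the suspicious value
readings = [5.0, 5.1, 4.9, 5.2, 5.0, 4.8, 5.1, 9.7, 5.3, 4.9]

outlier_depth = mean_depth(9.7, readings)
normal_depth = mean_depth(5.0, readings)
print(f"outlier depth {outlier_depth:.2f} vs. normal depth {normal_depth:.2f}")
```

The suspicious reading is cut off after far fewer splits on average, and that shorter path is what marks it as an anomaly.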

7. Naïve Bayes Classifier Algorithm (Supervised)

Modeled according to Bayes’ theorem, a formula for determining the probability of an event given related conditions, Naïve Bayes is a classification algorithm that treats every input feature as independent of the others given the class. This “naïve” assumption rarely holds exactly, but it keeps the model simple, so it often produces useful results while using very little computation.
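Here is a small sketch of the Gaussian variant of Naïve Bayes, which assumes each feature follows a normal distribution within each class. That distributional choice, the temperature and pressure readings, and the function names are all assumptions made for illustration.

```python
import math
from collections import defaultdict


def fit_gaussian_nb(rows, labels):
    """Store a prior plus a per-feature (mean, variance) pair for every class."""
    grouped = defaultdict(list)
    for row, label in zip(rows, labels):
        grouped[label].append(row)
    model = {}
    for label, members in grouped.items():
        prior = len(members) / len(rows)
        stats = []
        for column in zip(*members):
            mean = sum(column) / len(column)
            # Small constant avoids division by zero for constant features
            var = sum((v - mean) ** 2 for v in column) / len(column) + 1e-9
            stats.append((mean, var))
        model[label] = (prior, stats)
    return model


def predict(model, row):
    """Pick the class with the highest log prior plus summed per-feature log-likelihood."""
    def log_posterior(label):
        prior, stats = model[label]
        total = math.log(prior)
        for value, (mean, var) in zip(row, stats):
            total += -0.5 * math.log(2 * math.pi * var) - (value - mean) ** 2 / (2 * var)
        return total
    return max(model, key=log_posterior)


# Hypothetical data: (temperature °C, pressure bar) for each part, with its inspection result
rows = [(200, 1.0), (205, 1.1), (198, 0.9), (240, 1.6), (245, 1.5), (238, 1.7)]
labels = ["ok", "ok", "ok", "defect", "defect", "defect"]

model = fit_gaussian_nb(rows, labels)
print(predict(model, (202, 1.0)), predict(model, (242, 1.6)))
```

Summing the per-feature log-likelihoods is where the independence assumption shows up: each feature contributes on its own, without reference to the others.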

8. K-Means Clustering (Unsupervised)

K-means partitions unlabeled data into k groups, or clusters, based on shared attributes, where the number of clusters k is chosen in advance. The clusters themselves are discovered from the data and provide valuable insight for pattern recognition and identifying anomalies or outlier data. One of K-means’ main benefits is that it scales well to large datasets.
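The algorithm alternates between two steps: assign each point to its nearest centroid, then move each centroid to the mean of its assigned points. The sketch below shows that loop with fixed starting centroids so the result is repeatable. The cycle-time and power figures are hypothetical, and real implementations usually pick starting centroids more carefully.

```python
def kmeans(points, centroids, iterations=10):
    """Alternate assignment and update steps for a fixed number of iterations."""
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its members
        centroids = [
            tuple(sum(dim) / len(members) for dim in zip(*members)) if members else c
            for members, c in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical data: (cycle time in seconds, power draw in kW) per machine run
points = [(10, 2.0), (11, 2.2), (9, 1.9), (30, 5.0), (31, 5.2), (29, 4.9)]

# k = 2, starting from two of the observed points
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])
print(centroids)
```

No labels were supplied, yet the runs separate into a fast, low-power group and a slow, high-power group.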

9. Support Vector Machine (SVM) (Supervised)

SVM is an algorithm used for both regression and classification. Each training sample is treated as a point in a space with one coordinate per feature, or variable. For classification, the SVM finds the boundary (hyperplane) that separates the classes with the widest possible margin, and kernel functions let it handle classes that cannot be separated by a straight line, making SVMs powerful ML classifier algorithms.

10. K-Nearest Neighbors (KNN) (Supervised)

K-nearest neighbors algorithms are used to estimate whether a data point belongs to a specific group or classification. KNN is often implemented for pattern recognition and regression purposes. It functions by labeling a new data point according to the k most similar points in the stored data: a majority vote for classification, or an average for regression. This algorithm operates on the assumption that similar data points will likely share the same label or values.

KNN has no real training phase because it simply stores the data, but preprocessing such as feature scaling and removing mislabeled or noisy points is needed to keep it accurate. With that in place, KNN can capture highly complex decision boundaries.
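A minimal sketch of KNN classification follows, using Euclidean distance and a majority vote among the k nearest stored points. The roughness and hardness measurements are hypothetical, and `knn_predict` is an illustrative helper rather than a library function.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """train is a list of (features, label); return the majority label of the k nearest."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical data: (surface roughness in microns, hardness in HRC) with inspection result
train = [
    ((0.8, 60), "pass"),
    ((0.9, 61), "pass"),
    ((1.0, 59), "pass"),
    ((2.5, 45), "fail"),
    ((2.7, 44), "fail"),
    ((2.4, 46), "fail"),
]

print(knn_predict(train, (0.85, 60)), knn_predict(train, (2.6, 45)))
```

Note that hardness spans a much larger numeric range than roughness here, so it dominates the distance. That is why features are usually rescaled to comparable ranges before KNN is applied.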

11. Principal Component Analysis (PCA) (Unsupervised)

PCA is a dimensionality reduction algorithm (as described below) capable of reducing large datasets into a lower-dimension representation while retaining as much of the data’s variance as possible.

PCA is used in image processing, complex pattern recognition, and other forms of data preprocessing. It is a powerful ML algorithm used for simplifying overly complex computations to improve efficiency.
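One common way to compute PCA is to take the eigenvectors of the data's covariance matrix and keep those with the largest eigenvalues. The sketch below does this with NumPy on synthetic data. The three correlated "sensor channels" are randomly generated for illustration, and the `pca` function is an assumption of this example, not a library API.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components; also return explained-variance ratios."""
    X_centered = X - X.mean(axis=0)
    cov = np.cov(X_centered, rowvar=False)
    eigenvalues, eigenvectors = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigenvalues)[::-1][:n_components]  # largest variance first
    components = eigenvectors[:, order]
    explained = eigenvalues[order] / eigenvalues.sum()
    return X_centered @ components, explained


# Synthetic data: three sensor channels that all track one underlying signal plus small noise
rng = np.random.default_rng(0)
base = rng.normal(size=(100, 1))
X = np.hstack([base, 2 * base, -base]) + 0.01 * rng.normal(size=(100, 3))

projected, explained = pca(X, n_components=1)
print(projected.shape, explained)
```

Because the three channels are nearly redundant, a single component keeps almost all of the variance, so the dataset shrinks from three columns to one with little information lost.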

12. Dimensionality Reduction (Supervised or Unsupervised)

Dimensionality reduction is a family of ML techniques, rather than a single algorithm, used to simplify high-dimensional data into a representation that preserves important attributes of the observed phenomenon but is easier to compute. It is valuable to understand because it is highly adaptable and pairs well with other methods, such as KNN and cluster-based algorithms. Principal component analysis, described above, is one of the most common examples.

Dimensionality reduction can be used to preprocess datasets in supervised or unsupervised ML processes to help isolate relevant data.

Leverage Best-in-Class Machine Learning Technology with Nanotronics

Nanotronics’ deep learning and edge computing technology, AIPC™, provides manufacturers the ability to aggregate real-time data from sensors, build predictive models, detect anomalies, and enable autonomous factory control.

Learn how you can implement nControl™ alongside our suite of state-of-the-art, ML-powered products to improve your operational efficiency, uncover cost-saving insights, and maximize uptime with ease.

Get in touch with one of our experts today.